OCI-GovCloud Migration: Copy from S3 to OCI

We are migrating from AWS to Oracle Cloud Infrastructure (OCI) during the fall/winter of 2023. Our checklist for success involves the following:

- A multi-domain custom URL that accurately permits users to log into the correct applications and gives developers the access they need for database tools, including APEX

- Migrate a database pump file from AWS to OCI

- Install a database pump file on OCI into specific schemas

- Have functioning applications (kind of obvious, huh?)

- Be able to send email from Oracle APEX to users

The objective of this stage of our migration is to move the database pump file from AWS to OCI.

What Did Work

This process is awkward, slow, and rather manual. On the positive side, it is also entirely transparent. I do NOT care that it is awkward, slow, and rather manual, because we have to do each schema just once. The one exception is a production schema that we do twice (once to practice and configure, and once on the go-live weekend).

Success ranks higher than efficiency when the task is not repeated. Again, if you have comments, write an article and share your experiences. Two experienced professionals spent 2 full work days experimenting and failing before finding success. I'll review the failures below.

Each to their Strength

AWS CLI

The AWS CLI works brilliantly and is robust. It installs best on operating systems that AWS loves, such as the Linux versions that AWS supports on its EC2 instances. Get it configured with your credentials and boom, you can copy files from an EC2 instance to an AWS S3 bucket. Perfect.

AWS CLI did not work with Oracle’s Object Storage. Our failures included:

- could not connect to endpoint URL

- 401/authorization

My colleague, Eli Duvall, and I worked very hard to follow Ron Ekin's article on OCI Object Storage using AWS CLI. We came close. Our instance is built within FedRAMP/GovCloud, so there are more security constraints. Maybe that is the reason?

What success did we have? We could copy a large database pump file (dmp) from an EC2 instance to an AWS S3 bucket.

OCI CLI

We tried to install OCI CLI on the AWS EC2 instance. What a failure. It appeared that our Linux version on AWS was not supported by Oracle’s CLI. Hummm?

What does OCI CLI install well on? Correct: an Oracle Linux server located at OCI. So we did that. We could copy and list files in Object Storage buckets from the Linux command line.

The Gap

AWS CLI worked well within AWS.

OCI CLI worked well within OCI.

We needed either OCI CLI installed on an AWS server or AWS CLI installed on an Oracle Linux server. We failed at both. Errors and time-wasting articles robbed us of hours. We finally installed a third-party app called s3cmd on our Oracle Linux server.

https://www.howtogeek.com/devops/how-to-sync-files-from-linux-to-amazon-s3

This worked great.

The Workflow

Step 1: Database Pump

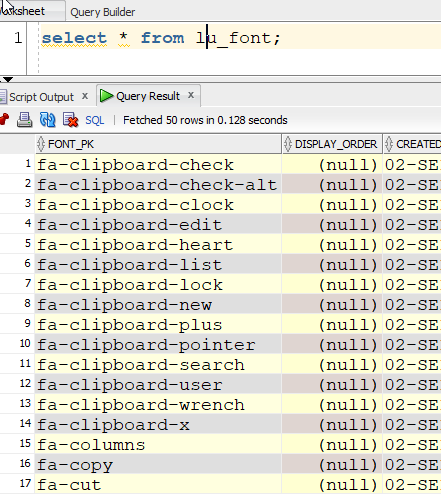

Find the folder/directory where the backup files (DMP) will land. First, use a query to find the directory and its folder:

select * from all_directories;

For me, the desired directory is:

data_pump_dir => /oracle/admin/proddb/dpdump/

On the AWS Linux or Windows server that hosts the Oracle database, run your database pump command.

sudo su - oracle

cd /oracle/admin/proddb/dpdump

expdp fred/secret_password directory=DATA_PUMP_DIR dumpfile=my_app_20231107.DMP logfile=my_app_20231107.log schemas=MY_APP

This file now sits in your designated Oracle directory. We navigated to this folder and confirmed the contents with ll, ls, or dir, depending on your operating system.
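Every later step comes back to the question "is the file size the same?", so it helps to capture the exact byte count right after the export. The following is a minimal sketch. It assumes a Linux host with GNU coreutils; on Windows, dir shows the same number. The function name dump_bytes is hypothetical.

```shell
#!/usr/bin/env bash
# Minimal sketch: print the exact byte size of a Data Pump file so later copies
# (S3, the OCI Linux server, Object Storage, DATA_PUMP_DIR) can be compared to it.
# Assumes GNU coreutils `stat`, which is standard on Linux.

dump_bytes() {
  # $1 = path to the dump file; prints its size in bytes.
  stat -c %s "$1"
}

# Example, using the path from Step 1 (adjust to your environment):
# dump_bytes /oracle/admin/proddb/dpdump/my_app_20231107.DMP
```

Write the number down. It is the target for every size check that follows.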

Step 2: Copy file to AWS S3

With AWS CLI installed and configured, make sure that you can run some basic commands.

And yes, our credentials file uses a profile. That profile name is the same as the name of the AWS user that we set up. The access key ID and the secret access key are located in the file ~/.aws/credentials (which is easily edited with ye olde vi or your favorite tool).

aws --version

aws s3api list-objects-v2 --bucket sp-log-test --profile apex-s3-user

If your AWS CLI is operational and your basic tests worked, copy your dump file to the desired AWS S3 bucket. Modify the paths and filenames to match your needs. You may need the --profile option if you have multiple profiles set up.

aws s3 cp /oracle/admin/proddb/dpdump/my_app_20231107.DMP s3://the_bucket_name/my_app_20231107.DMP --profile my_profile

Success? Does the file show up in the S3 bucket when you look in your browser? Is the file size the same? Good.
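You can answer "is the file size the same?" from the command line instead of the browser, because the AWS CLI can report an object's size. The following is a minimal sketch. It assumes the placeholder bucket, key, and profile from the copy command above. The helper names s3_object_bytes and sizes_match are hypothetical. aws s3api head-object and its ContentLength field are part of the AWS CLI.

```shell
#!/usr/bin/env bash
# Minimal sketch: compare the local dump's byte count with the S3 object's.
# Bucket, key, and profile are the placeholders from the aws s3 cp command above.

s3_object_bytes() {
  # $1 = bucket, $2 = object key, $3 = AWS CLI profile.
  # head-object returns the object's metadata; ContentLength is its size in bytes.
  aws s3api head-object --bucket "$1" --key "$2" --profile "$3" \
    --query ContentLength --output text
}

sizes_match() {
  # Exit status 0 only when both byte counts are identical.
  [ "$1" -eq "$2" ]
}

# Example:
# local_size=$(stat -c %s /oracle/admin/proddb/dpdump/my_app_20231107.DMP)
# remote_size=$(s3_object_bytes the_bucket_name my_app_20231107.DMP my_profile)
# if sizes_match "$local_size" "$remote_size"; then echo "sizes match"; else echo "SIZE MISMATCH"; fi
```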

Log off of your lovely Linux server. You're done with 1 database pump from 1 schema.

Step 3: OCI CLI

Log into a compute instance running Oracle Linux at OCI. Do the clicking and such. Don't care much about the server: small and disposable is the key. When we're done with our migration, we don't plan on having any EC2 instances.

OCI CLI was installed, but the configuration file did not exist. I went to my personal user profile in OCI and asked for an API key. I pulled down my private and public keys and stored them locally. I copied the profile that has the fingerprint and all that loveliness, then did a vi on ~/.oci/config (the OCI CLI's default configuration file):

[DEFAULT]

user=ocid1.user.oc2..aaaazzzzzzzzzzzzzzzzzzzzzzzzzz5sa

fingerprint=e7:8a:5d:1b:z1:07:30:3e:e1:a4:g2:a0:c8:i5:e8:2a

tenancy=ocid1.tenancy.oc2..aazzzzzzzzzzzzzzzzzzzzaz42anlm5jiq

region=us-langley-1

key_file=/home/opc/.oci/cm_2023-11-08T18_50_02.746Z.pem
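A quick sanity check of that file before the first oci command can save time. The key_file path must point at the downloaded private key. The OCI CLI also warns when the config or key file is readable by other users. The following is a minimal sketch. It assumes the config uses the [DEFAULT] profile shown above. The function names oci_key_file and check_oci_config are hypothetical. If permissions are the problem, chmod 600 on both files fixes it.

```shell
#!/usr/bin/env bash
# Minimal sketch: sanity-check the [DEFAULT] profile of an OCI CLI config file.
# Verifies key_file exists and that the config and key are mode 600 (owner only).

oci_key_file() {
  # $1 = config file; prints the key_file value from the [DEFAULT] section.
  awk -F'=' '
    /^\[/ { in_default = ($0 == "[DEFAULT]"); next }
    in_default {
      key = $1; gsub(/[ \t]/, "", key)
      if (key == "key_file") {
        val = substr($0, index($0, "=") + 1)
        gsub(/^[ \t]+|[ \t]+$/, "", val)   # trim surrounding whitespace
        print val; exit
      }
    }
  ' "$1"
}

check_oci_config() {
  # $1 = config file (for example ~/.oci/config). Prints "ok" or the first problem found.
  local cfg="$1" key mode f
  key=$(oci_key_file "$cfg")
  key="${key/#\~/$HOME}"          # expand a leading ~ the way a shell would
  [ -n "$key" ] || { echo "no key_file in [DEFAULT]"; return 1; }
  [ -f "$key" ] || { echo "key_file not found: $key"; return 1; }
  for f in "$cfg" "$key"; do
    mode=$(stat -c %a "$f")       # octal permission bits, e.g. 600
    if [ "$mode" != "600" ]; then
      echo "permissions on $f are $mode; consider: chmod 600 $f"
      return 1
    fi
  done
  echo "ok"
}

# Example:
# check_oci_config ~/.oci/config
```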

We did a few basic tests to confirm functionality.

oci os bucket list -c ocid1.compartment.oc2..aaaazzzzzzzzzzzzzzzzzzzzzzzzzzzzzzzzlqk7la

-- SUCCESS

oci os object get -bn mucket-20231102-1133 --file - --name docs.json

-- SUCCESS

oci os object get -ns whooci --bucket-name mucket-20231102-1133 --file /home/opc/fakeinvoice.pdf --name fakeinvoice.pdf

-- SUCCESS

oci os object list -ns whooci --bucket-name mucket-20231102-1133 --all

-- SUCCESS

We can now copy files from Object Storage to the Linux server. YAY.

At this point we declared that we were “walking the dog”. In other words, the dog was not walkin’ us around. We were in charge.

Step 4: AWS CLI/ s3cmd

We entirely failed at installing AWS CLI on this server. After a long time, we pivoted to s3cmd, which installed nicely. Before jumping into this, you will need your AWS S3 credentials (see Step 2 above). It's best to follow the instructions at https://www.howtogeek.com/devops/how-to-sync-files-from-linux-to-amazon-s3

wget https://sourceforge.net/projects/s3tools/files/s3cmd/2.2.0/s3cmd-2.2.0.tar.gz

tar xzf s3cmd-2.2.0.tar.gz

cd s3cmd-2.2.0

sudo python setup.py install

s3cmd --configure

With the configure option, we keyed in the super secret AWS credentials (access key and secret key) for my AWS user. Then we tested a few basic commands:

s3cmd ls

-- success, listed the stuff.

s3cmd get s3://my-bucket-test/yum.log-20190101 /home/opc/yum.log-20190101

-- copied a file. success

Step 5: Copy file from AWS S3 to Linux Server

We successfully copied an 11GiB file from AWS S3 to the Linux server sitting at OCI.

s3cmd get s3://my-bucket-test/myapp_20231107.DMP /home/opc/myapp_20231107.DMP

-- success, 449s for 11G / 7.48min

While it did take 7.5 minutes, we had already invested 16 hrs in failures (2 people failing hard for 2 work days).
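Here is a rough sense of the throughput behind that number. It assumes "11G" means 11 GiB, as stated above, and 1 GiB = 1024 MiB:

```latex
\begin{aligned}
11\ \text{GiB} &= 11 \times 1024\ \text{MiB} = 11264\ \text{MiB} \\
\text{throughput} &\approx \frac{11264\ \text{MiB}}{449\ \text{s}} \approx 25.1\ \text{MiB/s}
\end{aligned}
```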

Step 6: Copy file from Linux Server to OCI Object Storage Bucket

“To each their own” was the solution. So we used the OCI CLI to put the file in a bucket. We sensed success instantly when the process broke our file into 84 parts. Because we were “local” to OCI, the transfer took just over half as long as the copy from AWS.

oci os object put -ns whooci --bucket-name mucket-20231102 --file /home/opc/myapp_20231107.DMP

It took about 4 minutes to transfer the file.
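For files large enough to trigger a multipart upload, the OCI CLI cuts the file into fixed-size parts. The part count is therefore the file size divided by the part size, rounded up. The following is a minimal sketch. It assumes the CLI's documented default part size of 128 MiB; confirm the default with oci os object put --help for your CLI version. The expected_parts helper is hypothetical. --part-size is an existing oci os object put option.

```shell
#!/usr/bin/env bash
# Minimal sketch: estimate the part count of an OCI CLI multipart upload.
# Assumes the CLI's default part size of 128 MiB; confirm with
# `oci os object put --help` for your CLI version.

expected_parts() {
  # $1 = file size in bytes, $2 = part size in MiB.
  # Prints ceil(bytes / part_bytes) using integer arithmetic.
  local bytes="$1"
  local part_bytes=$(( $2 * 1024 * 1024 ))
  echo $(( (bytes + part_bytes - 1) / part_bytes ))
}

# Example, using the file, namespace, and bucket from the command above:
# f=/home/opc/myapp_20231107.DMP
# expected_parts "$(stat -c %s "$f")" 128
# oci os object put -ns whooci --bucket-name mucket-20231102 --file "$f" --part-size 128
```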

Step 7: Copy file from OCI Object Storage Bucket to Oracle’s DATA_PUMP_DIR

The file that we need is located in the OCI bucket, and we need it in the Oracle database directory designated for data pump files, typically DATA_PUMP_DIR. To accomplish this, you will use DBMS_CLOUD.GET_OBJECT from the SQL command line.

If you do not yet have credentials set up in the Autonomous Database, you'll need to set those up first.

- go to OCI

- Identity and Security

- Domains, then click the default domain (at the bottom of the domains table; it should be a blue link)

- On the left side of the screen, click Users, select your name/username

- Again, on the bottom half of the screen on the left side under Resources, select Auth Tokens

- Click Generate Token button, give it a name, then click Generate Token button again

- Copy the authentication code

or

- Select Profile (top right person icon)

- Choose My Profile from the list

- On the bottom half of the screen on the left side under Resources, select Auth Tokens

- Click Generate Token button, give it a name, then click Generate Token button again

- Copy the authentication code

- Either way, you need to generate and copy the token (OCI displays it only once)

begin

dbms_cloud.create_credential (

credential_name => 'OBJ_STORE_CRED_ME', --give the credential a recognizable name

username => 'me@example.com', --use your OCI username

password => 'superSecr3t' --auth token from steps above

);

end;
/

After the credential is established, you can get the object:

--use get_object code with credentials and specified directory

BEGIN

DBMS_CLOUD.GET_OBJECT(

credential_name => 'OBJ_STORE_CRED_ME',

object_uri => 'https://objectstorage.us-langley-1.oraclegovcloud.com/n/exampleoci/b/mybucket/o/myapp_20231107.DMP',

directory_name => 'DATA_PUMP_DIR');

END;
/

Use the select statement below to monitor the copy until the file size matches your target file size. There is no notification that the file is complete. You can watch it grow.

select * from dbms_cloud.list_files('DATA_PUMP_DIR');

Step 8: Import the Data Dump

This is covered in a different article.

What Did Not Work

We spent 2 days exploring solutions, articles, and technology that failed on us, including suggestions from Oracle technical support. There may be better, easier, and smoother techniques. If so, write an article and share them.

Challenges

When we created our database backups (Data Pump, or expdp) of our production commercial applications, we encountered very large files. We ran into compatibility issues between Oracle and AWS. And more… (of course).

Copying a Big File/Migrating a Big File

Our first attempt was on a database that tipped the scales at 11 gigabytes. That counts as a “big file” and is not easily moved with standard techniques. It must be handled as a multipart transfer or divided into smaller pieces by some other means. The advertised technique for copying a file from AWS S3 straight into a database directory uses

DBMS_CLOUD.GET_OBJECT

When used with DBMS_CLOUD.CREATE_CREDENTIAL, this worked so well that we thought we had made it. We tested with small files because we are human, impatient, and love success. It failed monstrously with big files.
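We did not go down this road. For reference, here is one generic way of dividing the file into smaller bits, as mentioned above, using GNU coreutils. split cuts the dump into fixed-size pieces, cat reassembles them, and a SHA-256 comparison confirms that the round trip was lossless. The following is a minimal sketch. The function names, paths, and chunk size are hypothetical.

```shell
#!/usr/bin/env bash
# Minimal sketch (not the method we used): split a large file into fixed-size
# chunks, reassemble them, and verify the round trip with SHA-256.
# Requires GNU coreutils (split, cat, sha256sum, cut).

split_file() {
  # $1 = file to split, $2 = chunk size understood by split (e.g. 500M, 1G).
  # Writes chunks named "$1.part.aa", "$1.part.ab", ...
  split -b "$2" "$1" "$1.part."
}

join_parts() {
  # $1 = original file path (the chunk prefix), $2 = output file.
  # The glob expands in sorted order, so aa, ab, ac ... are concatenated in sequence.
  cat "$1".part.* > "$2"
}

same_checksum() {
  # Exit status 0 only when both files have identical SHA-256 digests.
  [ "$(sha256sum < "$1" | cut -d' ' -f1)" = "$(sha256sum < "$2" | cut -d' ' -f1)" ]
}

# Example (hypothetical paths and chunk size):
# split_file /home/opc/myapp_20231107.DMP 1G
# join_parts /home/opc/myapp_20231107.DMP /home/opc/rejoined.DMP
# same_checksum /home/opc/myapp_20231107.DMP /home/opc/rejoined.DMP && echo "identical"
```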

OCI Object Storage

Object Storage Buckets do have a compatibility mode and are supposed to be compatible with AWS S3. I have written and maintained a PL/SQL AWS S3 API. It works modestly with OCI Object Storage, but not perfectly. I was not surprised to struggle for hours using the AWS Command Line Interface (CLI) trying to connect to OCI Object Storage to inventory objects in buckets and to move them.

Clone & Copy Utilities

We found numerous articles about clone and copy utilities. For this reason or that, we could not get these reliably installed and working on the various Linux servers.